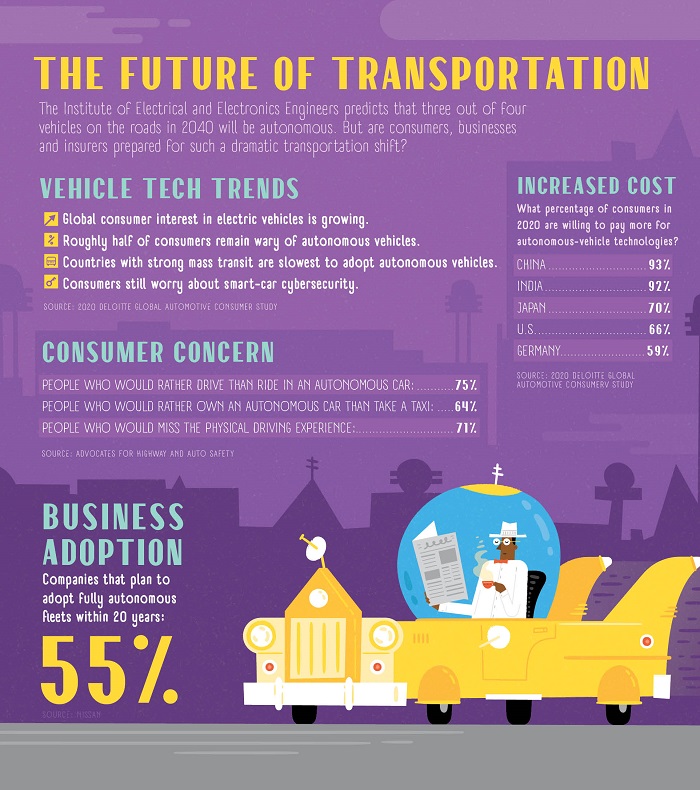

In 2020, safety concerns and regulatory hurdles are putting the brakes on the mainstream adoption of autonomous vehicles. (Illustration by Shaw Nielsen, from the November 2020 issue of NU Property & Casualty magazine.)

A few years back, automotive technologists such as Tesla CEO Elon Musk were downright boastful about the speedy timeline in which they anticipated bringing fully autonomous vehicles to the mainstream marketplace.

Today, in light of serious safety concerns and myriad social and regulatory roadblocks, driverless-car adoption appears to be progressing at a slower pace.

"There was a sense, maybe a year or two ago, that algorithms were so good, we're ready to launch at any minute," U.C. Berkeley Electrical Engineering and Computer Sciences Professor Avideh Zakhor told CNBC in late 2019. "Obviously, there's been these setbacks with people getting killed or accidents happening, and now we're a lot more cautious."

That should be welcome news for the insurance industry, which has long been anticipating economic disruption from the rise of driverless vehicles.

Scheduling setbacks

CNBC reported recently that Tesla's self-driving cars are taking longer than expected to build and release. This is after Elon Musk told his investors in 2019 that the company would have 1 million self-driving vehicles on the roads by now. But Musk's dream continues to face delays. The "technology is coming to cars, and insurance, much more gradually," CNBC reported, adding that insurers such as Allstate, Progressive and Geico are taking a measured approach to covering such vehicles as the auto industry becomes ever more cautious about how far to push its autonomous-vehicle equipment as well as how much information to share with the public.

Some analysts argue that regulatory and legal hurdles are holding up the commoditization of autonomous vehicles more than technological hurdles and that this landscape will "hold back autonomous cars from becoming public anytime soon," according to Counterpoint Research. "The automotive industry is still a long way from manufacturing self-driving vehicles anytime soon with challenges remaining in consumer awareness, standardization, economics, regulations/compliances, privacy, safety, and security," writes Counterpoint Research Global Consulting Director Vinay Piparsania.

Testing uptick

It's important to note that vehicles with semi-autonomous functions are already widely available, equipped with Advanced Driver Assistance Systems (ADAS) that rely on cameras and sensors. Self-driving technology also includes radar, lidar, sonar and GPS. The Society of Automotive Engineers has defined six levels of automated driving functionality, with the lowest level (0) indicating the software has no sustained control over the vehicle, and the highest level (5) indicating the vehicle needs no human intervention to operate.

Waymo and other autonomous vehicle technologists have long argued that this technology has the capacity to make driving dramatically safer. Consider this observation from an experienced truck driver that tests big rigs for Waymo Via, the company's trucking division: "By working with the engineering teams and sharing all about truck behavior and the rules of the road, I'm helping the Waymo Driver see and learn what I have. It's my job to impart the lessons I've learned the hard way so that the Waymo Driver is the safest it can be. That is the largest impact I can have — knowing that society will benefit from my lived experience for years and years to come."

After pushing back its original timeline, GM Cruise announced in October that it plans to begin testing unmanned autonomous vehicles in San Francisco after the company received a permit from the California Department of Motor Vehicles to remove human backup drivers from its test vehicles. Waymo, AutoX Technologies, Nuro and Zoox all have received similar permits.

"Before the end of the year, we'll be sending cars out onto the streets of SF — without gasoline and without anyone at the wheel," Cruise CEO Dan Ammann wrote in a Medium post. "Because safely removing the driver is the true benchmark of a self-driving car, and because burning fossil fuels is no way to build the future of transportation."

Waymo also recently announced plans to expand the availability of its ride-hailing service to the public around its testing area in Arizona. The California-based tech firm owned by Google's parent company (Alphabet Inc.) is generally considered a pace-setter in the driverless vehicle space. It's been using a 100-square-mile area in Phoenix as its testing site since 2016.

"We're just ready from every standpoint," Waymo CEO John Krafcik told TechCrunch about launching driverless ride-hailing cars. But for now, even Waymo cars with the technology to operate without a human will host trained operators. "We've had our wonderful group of early riders who've helped us hone the service, obviously not from a safety standpoint, because we've had the confidence on the safety side for some time, but rather more for the fit of the product itself."

And while Krafcik appears to have the confidence of a polar bear in a snowstorm regarding the readiness of his company's technology, neither society nor the risk-averse insurance industry seems ready to completely embrace the idea of robotic cars and trucks on the roads. Consider this comment to the most recent Waymo news by a TechCrunch reader: "Bad product. Been 'sharing' the road with these moronic vehicles since they got here. You would have to be an idiot to get in one of these without a safety driver."

Waymo, which declined to provide an interview for this story, also appears to be working overtime to dispel suspicion around its freight delivery business. To that end, the company publicly shared in October some previously undisclosed details about its driverless truck technology.

"The foundational systems are all the same," Boris Sofman, Waymo's director of engineering for trucking, told Fortune around the same time the company published a statement on its website stating that driverless freight-truck technology essentially mirrors its driverless car technology.

Regulatory hurdles

Technologists working to further driverless-vehicle development still have mountains to climb when it comes to proving to regulators and the public that such cars and trucks are ready for prime time.

The Insurance Institute for Highway Safety acknowledged in June that driver mistakes cause "virtually all crashes," which could make automation a game-changer in road safety. But the IIHS, which is a six-decade-old nonprofit funded by auto insurance companies, also determined that self-driving vehicles that operate "too much like people" would only prevent about a third of crashes.

"It's likely that fully self-driving cars will eventually identify hazards better than people, but we found that this alone would not prevent the bulk of crashes," said IIHS Vice President for Research Jessica Cicchino.

Cicchino co-authored a study that analyzed police-reported crashes and found that nine out of 10 crashes were caused by some element of driver error. The study determined that only a fraction of crashes could be prevented by an automated vehicle's human-imitated functions. Avoiding additional accidents would require software "programmed to prioritize safety over speed and convenience."

This bolsters previous research conducted by the National Highway Traffic Safety Administration that determined 90% of all crashes are caused by human error.

"Building self-driving cars that drive as well as people do is a big challenge in itself," added IIHS Research Scientist Alexandra Mueller, lead author of the study. "But they'd actually need to be better than that to deliver on the promises we've all heard."

As part of the study, IIHS referenced a widely publicized 2018 fatal accident in which a driverless Uber failed to quickly recognize a pedestrian walking on the side of the road at night in Tempe, Ariz. Elaine Herzberg, 49, proceeded to cross the road, and the car was unable to respond quickly enough to avoid hitting her.

"Our analysis shows that it will be crucial for designers to prioritize safety over rider preferences if autonomous vehicles are to live up to their promise to be safer than human drivers," Mueller concluded.

Such issues are certainly of concern to insurers, which may be why Waymo has been somewhat hushed about its insurance arrangements, a public relations strategy that's raised eyebrows among consumer advocacy groups. Tesla, on the other hand, responded to coverage challenges for its vehicles by launching its own insurance products, which are currently available in California.

"I think these companies are so litigation-averse that they will reveal details but only in confidential circumstances," James McPherson, a San Francisco attorney who founded the consulting company SafeSelfDrive Inc., told The Recorder in late 2018.

Insurance industry analysts predict insurers will need to develop new and creative commercial and product liability coverages for fully autonomous cars and trucks. When such vehicles do arrive, they could spur a serious constriction of the personal auto insurance market. A 2017 report released by Accenture and the Stevens Institute of Technology found an $81 billion opportunity between 2020 and 2025 for insurers to develop coverage options for autonomous vehicles. Researchers predicted that 23 million fully autonomous vehicles will be traveling U.S. roads and highways by 2035, a shift that could coincide with a $25 billion drop in auto insurance premiums.

Less like humans, please

Driverless cars have been touted by proponents as the safest way to counter the many human errors that cause accidents. The Insurance Institute for Highway Safety, however, reports that driverless vehicle technology that simply mimics human behavior will likely cause just as many crashes as people do.

IIHS researchers categorize the types of driver-related accidents as follows:

- "Sensing and perceiving" errors included things like driver distraction, impeded visibility and failing to recognize hazards before it was too late.

- "Predicting" errors occurred when drivers misjudged a gap in traffic, incorrectly estimated how fast another vehicle was going or made an incorrect assumption about what another road user was going to do.

- "Planning and deciding" errors included driving too fast or too slow for the road conditions, driving aggressively or leaving too little following distance from the vehicle ahead.

- "Execution and performance" errors included inadequate or incorrect evasive maneuvers, overcompensation and other mistakes in controlling the vehicle.

- "Incapacitation" involved impairment due to alcohol or drug use, medical problems or falling asleep at the wheel.

IIHS concluded that the complex roles autonomous vehicles must perform paired with risks linked to vehicle-occupant preferences create a conflict when it comes to safety management.

Digital vulnerability

As 2020 became a hacker's paradise, with the uptick in remote workers due to the global pandemic, cybersecurity concerns surrounding autonomous vehicles are rising. Hackers could potentially tap an autonomous vehicle's computer systems to acquire private driver data or cause other harm. And this concern is but one raised by autonomous vehicle critics. Other potential obstacles to bringing these cars and trucks to market in a more mainstream way include:

- AV communications may not work as well in crowded inner-city environments.

- Vehicle software and computer components could be highly sensitive to weather extremes.

- AV sensors have been inconsistent in detecting large animals.

- Dated map data could throw off an AV's navigation.

- Mass manufacturing of such vehicles and technology could introduce its own quality-control problems.

- Government regulation and product development may not happen concurrently.

The list above only begins to touch on the various moral and ethical questions raised by the potential mainstreaming of autonomous vehicles.

Even so, the National Conference of State Legislatures reports that the majority of states in the U.S. are currently working on some type of autonomous vehicle legislation.